As a fan of smaller schools, much as it pains me to write, I find that with respect to student performance, the size of the school does not matter at all. At least in Madison.

After reading this article from the WSJ, I became curious about the expert's statement that "Research so far, Odden said, fails to show a clear link between achievement and school size, particularly within the range of sizes in Madison." Frankly, I didn't believe him.

So I dug into the data and, it turns out, given the limited amount of data I used, there seems to be absolutely no correlation between school (or in this case, class) size and test performance.

Now that is not to say small schools should be eliminated. There are other positive attributes of small schools that I have not measured here. I'm only looking at (as I've mentioned many times) the average percentage of non-economically disadvantaged students who score in the "advanced" category on the statewide tests.

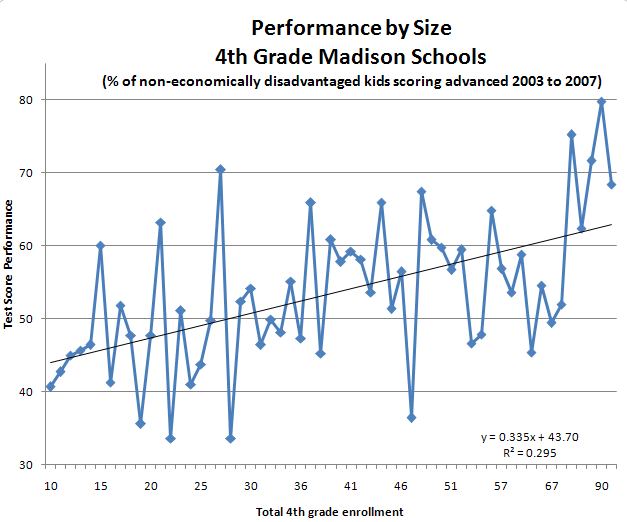

First try - Positive Correlation

Here's my first analysis. You can see what looks like a mild correlation between 4th grade class enrollment and performance. That is, the larger the school, the better the performance. It's pretty zigzaggy, and the R-squared value is 0.295, which isn't exactly a strong correlation, but it is somewhat correlated.
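For anyone who wants to check an R-squared like this themselves, here is a minimal sketch of how it can be computed for a straight-line fit. The function name `r_squared` and the sample numbers are made up for illustration; they are not the data behind the chart.

```python
def r_squared(xs, ys):
    """R-squared of the least-squares line through the points (xs, ys)."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    syy = sum((y - mean_y) ** 2 for y in ys)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    if sxx == 0 or syy == 0:
        # No spread in one variable: treat as no linear relationship.
        return 0.0
    return (sxy * sxy) / (sxx * syy)


# Hypothetical 4th grade enrollments and "percent advanced" scores.
enrollment = [30, 45, 50, 62, 75, 80]
pct_advanced = [40.0, 38.0, 52.0, 47.0, 60.0, 51.0]
print(round(r_squared(enrollment, pct_advanced), 3))
```

For a simple one-variable fit, this is just the square of the usual correlation coefficient, so it always lands between 0 and 1.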

Then I began to think, hmm... the newer schools are bigger and they tend to be in more affluent areas hence we might be seeing simply a correlation to location rather than really to size. Hence my next analysis.

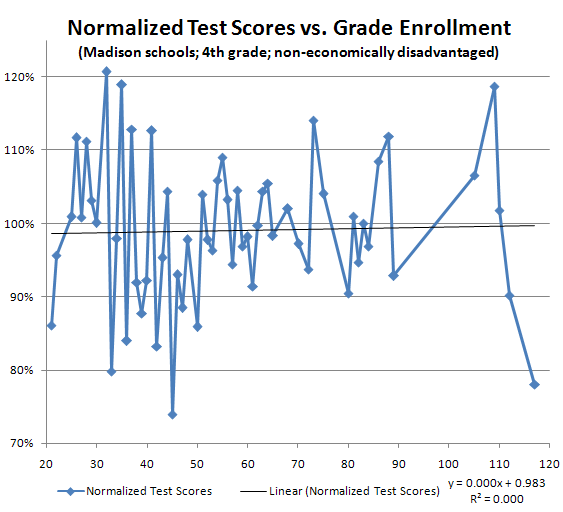

Right Try - No Correlation

What I figured is that the test scores had to be normalized somehow to the school itself. We can't compare a big new suburban Madison school to an old urban school, right? We really can only properly compare scores within a school to itself.

Fortunately class sizes change within schools. So what I did was normalize the test scores to the schools' average test score. So the graph below shows test scores as percentages above or below the individual school's average plotted against the 4th grade enrollment.

E.g. a point at a grade enrollment of 30 with a value of 120% indicates that for some school having an enrollment of 30, they had a test score that was 20% higher than their average test score.
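The normalization described above can be sketched roughly like this. The record layout `(school, enrollment, score)` and the function name are my own assumptions for illustration, and the numbers are invented; it also assumes no school has an average score of zero.

```python
from collections import defaultdict


def normalize_to_school_mean(records):
    """Express each score as a percentage of its own school's average.

    records: list of (school, enrollment, score) tuples (hypothetical layout).
    Returns a list of (school, enrollment, pct_of_school_mean) tuples.
    """
    totals = defaultdict(float)
    counts = defaultdict(int)
    for school, _, score in records:
        totals[school] += score
        counts[school] += 1

    normalized = []
    for school, enrollment, score in records:
        school_mean = totals[school] / counts[school]
        normalized.append((school, enrollment, 100.0 * score / school_mean))
    return normalized


# Invented data: school "A" in two different years, school "B" in one.
sample = [("A", 30, 60.0), ("A", 40, 40.0), ("B", 50, 20.0)]
for row in normalize_to_school_mean(sample):
    print(row)
```

The normalized values, not the raw scores, are then what gets plotted against enrollment.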

You can see now that the correlation (via the R-squared) is exactly 0.0. There is no relationship between school size and test performance.

Understand the differences and quality of Madison-area schools. The posts are in reverse order so to understand this best, you might want to read from the oldest first using the archive links below.

Monday, October 22, 2007

Wednesday, October 17, 2007

School District Shoot-out

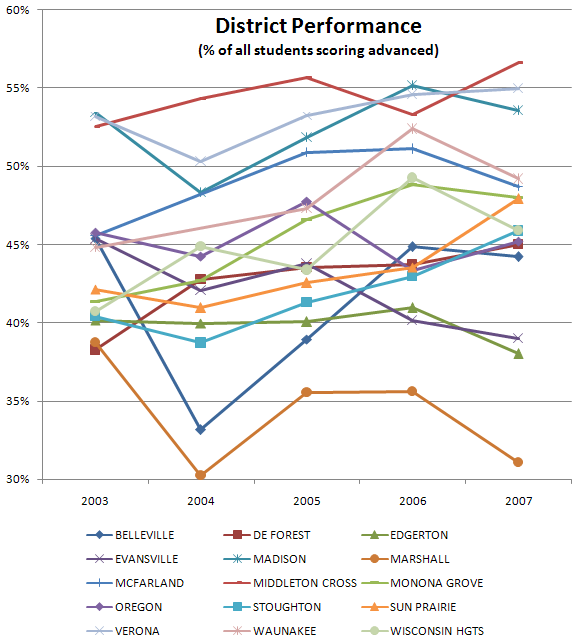

Oh alright, I shouldn't have titled this a "shoot-out," I guess, but that's what I'm calling an over-time comparison of the local districts. The comparison shows, for each district and year, the percentage of non-economically disadvantaged students across all grades and all tests scoring advanced.

Winners

I think the clear winners really are Madison, Middleton-Cross Plains, and Verona. I say that because their score lines sit above the rest in a sort of little group. They cross each other but not any of the lines below. And you can see how they're clustered at a high level in both 2006 and 2007.

Trends

One thing this graph shows is how districts are faring over time. 5 years isn't a long time but there seem to be a few trends (or non-trends as the case may be). I did a linear regression on the data points which may or may not be valid but it's better than eyeballing and actually contradicts eyeballing in several cases.

The district making the most progress with regard to increasing scores is Monona Grove. I think that's pretty evident in the chart. Of course starting low makes that easier, right?

The district making the worst progress is Evansville, which is making a relatively steep descent. The only other districts heading down in scores are Oregon (very slightly), Marshall, and Edgerton.

Sun Prairie is heading upward pretty well also. The rest of the districts are heading upward at a fairly slow pace and you can see that on the chart.
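The trend lines discussed above come down to the slope of a least-squares line through each district's yearly scores. Here is a minimal sketch; the district names and scores are placeholders, not the real numbers from the chart.

```python
def trend_slope(years, scores):
    """Slope of the least-squares line: score points gained (or lost) per year."""
    n = len(years)
    mean_x = sum(years) / n
    mean_y = sum(scores) / n
    sxx = sum((x - mean_x) ** 2 for x in years)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(years, scores))
    return sxy / sxx


# Placeholder districts with invented "percent advanced" values per year.
years = [2003, 2004, 2005, 2006, 2007]
districts = {
    "District A": [30.0, 33.0, 35.0, 39.0, 42.0],
    "District B": [45.0, 44.0, 41.0, 40.0, 38.0],
}

# Sort from steepest climb to steepest drop.
for name, scores in sorted(districts.items(),
                           key=lambda item: trend_slope(years, item[1]),
                           reverse=True):
    print(name, round(trend_slope(years, scores), 2))
```

A positive slope means the district is heading up and a negative one means it is heading down. With only five points per district, the slopes should be read as rough indications at best.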

Test Quality

I'm sure the test creators analyze their test quality to no end but there doesn't appear to be a clear simultaneous bump up or bump down for any particular year. I would expect, if the tests were poorly designed, that one year might be easier than another and you'd see all districts going up and down simultaneously. Not so, apparently.

Monday, October 15, 2007

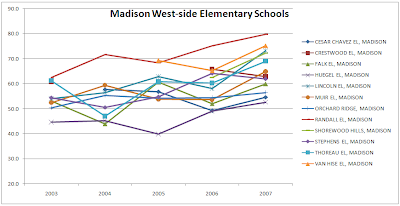

Madison Elementary Schools' Progress

What's happening with Madison elementary schools over time?

Well, it's not that clear. The first graph shows west-side elementary schools' progress over time with respect to my measurements. It does sort of seem they are doing better. Generally speaking, the lines trend upward, especially at the 2007 data point. The way they all trend upward for 2007 makes me think there is something not comparable between the 2007 and 2006 tests.

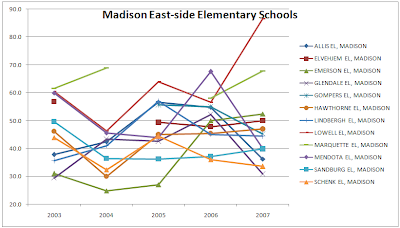

The second graph shows east-side elementary schools. It's a lot more zig-zaggy than the west-side one. Three schools, in particular, really shoot down for 2007: Sandburg, Mendota, and Glendale. I wonder why that is? Those are precipitous drops. Looks to me like you want your kids at Lowell or Marquette, which really seem pretty good on this chart (and, of course, according to the table in my previous post).

Monday, October 8, 2007

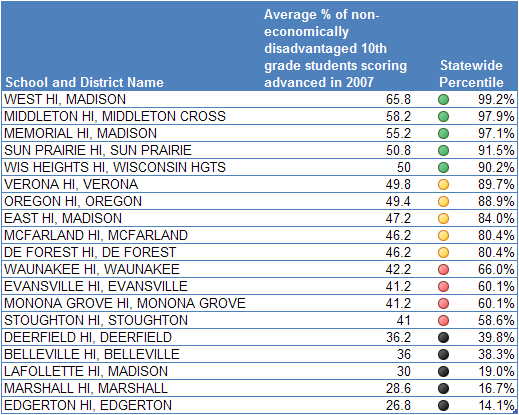

High Schools

Put that in your Pipe and Smoke It

For those folks so enamored of the various suburban "great schools," you should make sure you look at the facts. Specifically, I'm pretty amazed at the relatively poor performance of Waunakee HS. It's down in the 66th percentile statewide. That's not terrible but it's not a super-duper school either. Contrast it with West HS, which is in the 99th percentile statewide and, in fact, is the 4th best high school in Wisconsin (according to my analysis, etc., etc.).

Anyway for the most part, the high schools do pretty well. I would say anything above 80th percentile statewide is pretty good. Who knew that Wisconsin Heights HS was so good? I don't even know where it is! It got on this list because the district was in the area. It probably helps that they only have something like 95 students in 10th grade.

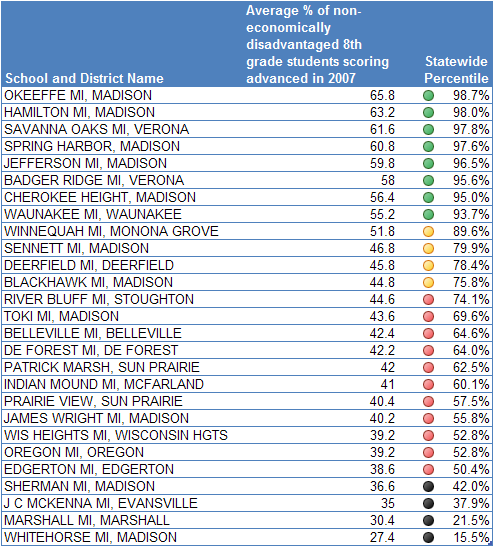

Amazing Facts

So what's kind of interesting to me is the high performance of Sun Prairie High School compared with the middling (at best) performance of students in Sun Prairie middle schools. Their two middle schools I have data for were hanging around at about 60th percentile statewide for 8th grade tests.

Then BOOM! the students get smart 2 grades later and push their high school scores to 92nd percentile. Of course this is a snapshot so the student groups are different. So perhaps the bad 8th grade scores we see will translate to bad 10th grade scores in 2 years. Or perhaps Sun Prairie HS is really, really good at educating.

Friday, October 5, 2007

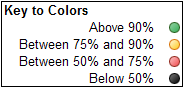

Dane County Middle Schools 2007 Rankings

So you know when I say 2007 rankings, the test data is from 2006, right? Anyway, again comparing only non-economically disadvantaged students, you can see 6 of the top 10 are Madison schools; both Verona schools are up in the top 10, as are Monona and Waunakee.

In general, I would say that the middle schools of the area seem to be doing pretty well. There are a couple duds way down at the bottom but you'll notice a lot fewer black circles than in my previous post on area elementary schools.

Of course I wouldn't say being in the 50th percentile statewide is any great accomplishment but, hey, at least it's not Whitehorse down in the 16th percentile there.

Something to take note of is that O'Keefe and Sherman have been merged. Some of Sherman's students are at O'Keefe and some at another school. I'm sure we can expect the excellent score of O'Keefe to descend precipitously as we average the 36.6 from Sherman with the 65.8 of O'Keefe to get, what, 51.2 dropping the best middle school down to 7th place or something.
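The 51.2 above is a plain average of the two scores. Since only some of Sherman's students moved to O'Keefe, an enrollment-weighted average would probably be closer to what actually shows up. Here is a small sketch of both; the student counts are invented purely for illustration, since I don't have the real split.

```python
def simple_mean(scores):
    """Unweighted average, as used for the 51.2 figure."""
    return sum(scores) / len(scores)


def weighted_mean(scores, weights):
    """Average of scores weighted by (for example) number of students."""
    return sum(s * w for s, w in zip(scores, weights)) / sum(weights)


sherman, okeefe = 36.6, 65.8

print(round(simple_mean([sherman, okeefe]), 1))

# Hypothetical head counts: 100 students arriving from Sherman, 200 already at O'Keefe.
print(round(weighted_mean([sherman, okeefe], [100, 200]), 1))
```

The more students who stay from the original O'Keefe group relative to the newcomers, the less the combined score drops.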

You know, the MMSD board really needs to be more aware of perception versus education. It is true that the goal is to educate all children highly. It is also true that that ain't gonna happen! One thing they can do something about is managing perceptions. Is it better to say MMSD has the 6th best middle school in the state (non-poor, etc., etc.) and a bad school? Or is it better to say MMSD has some above-average middle schools? My thought is the former. People demand excellence, not above-averageness.

Anyway again can anyone explain how Whitehorse can be so bad while O'Keefe can be so good?

Labels: madison, middle schools, mmsd, performance, quality, schools, wisconsin

Wednesday, October 3, 2007

Dane County Elementary Schools Re-ranked

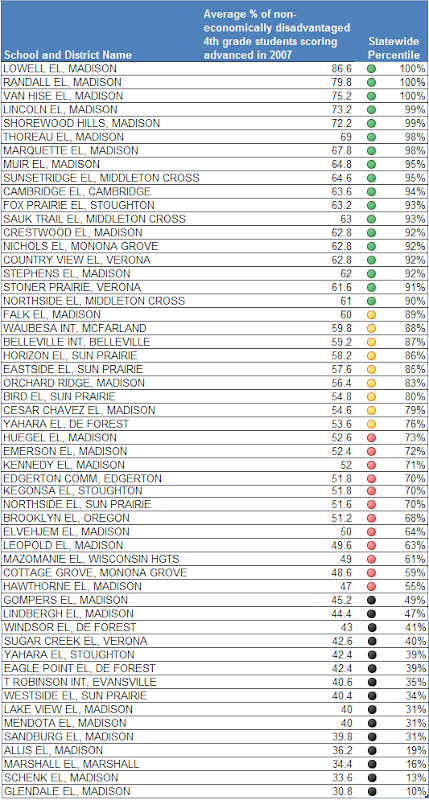

The 2007 state testing data is out and I thought I'd take another look. Again, I'm looking here at Dane County area schools only, compared with each other and statewide as well. The data you will see only includes non-poor students -- you can read more about why below.

Some Madison Elementary Schools are Tops

As you can see, Madison schools are simultaneously excellent and terrible. The top 8 are MMSD schools as are 6 of the bottom 10. Wow!

Not only does MMSD have the top elementary schools in the area, but the top 8 are above the 95th percentile statewide. That means those 8 schools are better (with respect to my measurements) than 95% of the other elementary schools in Wisconsin.

Furthermore, MMSD schools Lowell, Randall, and Van Hise are the #1, #2, and #3 elementary schools STATEWIDE. Yes you heard right. According to my ranking those are the 3 best elementary schools in the state for non-poor students.

Of the top 25 schools statewide, 7 are MMSD schools. No other area schools make the top 25.

You might consider moving to one of those attendance areas because these schools and the students in them are really, really good.

Other Madison Schools are the Pits

6 out of 10 of the worst area schools are also MMSD schools. WTF? These aren't poor kids scoring so badly either. I sliced and diced the data and couldn't find any demographic explanation for the bad scores. For those bad schools, no demographic group has a significantly higher score than the average shown (not white, Asian, or black kids, not girls, not boys, nothing). The only explanation can be that some of those schools are truly terrible.

How did I analyze the data?

The data are essentially the same as described in this post except for "Assumption 3". This time, instead of selecting white students' data as a proxy for socio-economic class, I took a poster's advice and used data for students who are not "economically disadvantaged." You can read about what defines that here.

The reason I'm restricting to the non-poor is to compare apples to apples. Averages across all students don't really tell the story. It's sort of like saying that Medina, WA, with a population of about 3,000 and the home of Bill Gates, has an average per-capita net worth of $19,000,000. That's sort of silly, right? Poor students are much more challenging to educate, so all-student averages come out higher for districts with no poor students.

So the short story can be seen in the table. To explain the numbers: Marquette Elementary in Madison, for example, had, averaged across all subject tests, 67.8% of its (non-poor) students score in the "Advanced" category. That 67.8% puts Marquette in the 98th percentile of elementary schools statewide. That is to say, Marquette has higher scores than 98% of all the other elementary schools in the state.
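Here is a rough sketch of that percentile idea: the share of the other schools whose score falls below a given school's score. The function name and the list of other schools' scores are invented for illustration, and ties are simply counted as "not below," which may differ from how a spreadsheet percentile function handles them.

```python
def percentile_rank(score, other_scores):
    """Percent of the other schools scoring strictly below `score`."""
    if not other_scores:
        return 0.0
    below = sum(1 for s in other_scores if s < score)
    return 100.0 * below / len(other_scores)


# 67.8 is Marquette's figure from the text; the other scores are made up.
others = [12.5, 30.0, 41.2, 55.0, 58.3, 61.0, 64.9, 70.1]
print(percentile_rank(67.8, others))
```

Run against the full statewide list of elementary schools, a result of 98 would mean the school outscored 98% of the others.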

Where's my school?

You might notice your school not on this list. Waunakee, for example, does not report any data for poor versus non-poor students. Perhaps that's because no elementary school students qualify for free lunch? If you don't see your school on the list, they did not report the non-poor category of students.

Also, I've restricted my analysis to the following districts: Belleville, Cambridge, De Forest, Deerfield, Edgerton, Evansville, Madison, Marshall, McFarland, Middleton-Cross Plains, Monona Grove, Oregon, Stoughton, Sun Prairie, Verona, Waunakee, and Wisconsin Heights.

Labels: elementary schools, madison, performance, quality, schools, wisconsin
